RUMI: Exploring Ocean Data in New Models

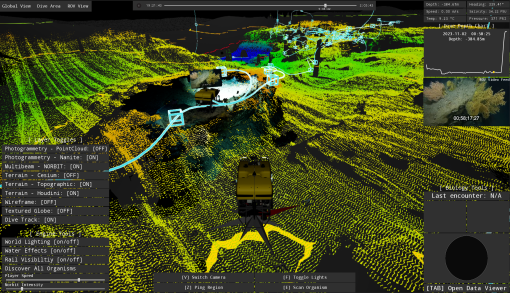

The Realtime Underwater Modeling and Immersion (RUMI) project is a new initiative aimed at transforming video and imagery from ROV dives into interactive, explorable terrains. To do this, RUMI integrates time-synced hydrographic surveys, deep-sea ROV video, high-resolution photogrammetry models from the Widefield Camera Array, control room audio, dive logs, and expert taxonomic analysis into a digital diorama powered by Unreal Engine.

We are excited by the potential of this new visualization method, which incorporates dive data for both scientific and educational audiences. Currently, the project focuses on building a proof-of-concept prototype and core functionalities to showcase the possibilities of future media experiences designed to inspire curiosity about our oceans. Unlike imagined underwater worlds, RUMI connects audiences to authentic expedition data.

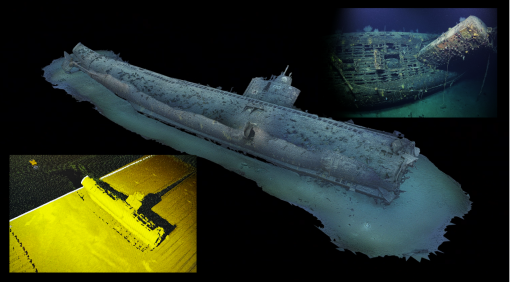

Development of this project has been funded by NOAA Ocean Exploration, building on technology developed with the Sexton Corporation through funding from the Office of Naval Research. The prototype data for RUMI was collected during the 2023 Ocean Exploration through Advanced Imaging expedition (NA156), an Office of Naval Research-funded cruise to test the Widefield Camera Array mounted on the remotely operated vehicle Hercules. Imagery from these dives, combined with near-seafloor sonar data from the vehicle-mounted Norbit sonar, enables the creation of three-dimensional models of each site. A customized software package for the Norbit sonar, developed by Navigator Dr. Kristopher Krasnosky, plays a crucial role in Nautilus operations, adding a rich data layer beyond the view of the cameras.

The project has so far focused on developing standard operating procedures and programmatic architecture. This season, the team looks forward to building on lessons learned by gathering new data sets from deep-sea exploration dives. With these data, we will create, iterate on, and refine education-focused immersive experiences that connect learners to the deep sea in unique and dynamic ways.

Stay tuned as we continue to test this new technology to bring ocean exploration (in 3D) to the whole world!